Manus brings the dawn of AGI, and AI security has changed again

Manus achieved a state-of-the-art (SOTA) score on the GAIA benchmark, outperforming OpenAI's models of the same tier. This suggests it can independently complete complex tasks such as cross-border business negotiations, which involve breaking down contract terms, predicting the counterparty's strategy, generating solutions, and even coordinating legal and finance teams. Compared with traditional systems, Manus offers dynamic goal decomposition, cross-modal reasoning, and memory-enhanced learning: it can break a large task into hundreds of executable subtasks, process several types of data at once, and use reinforcement learning to keep improving its decision-making efficiency and reducing its error rate.

Beyond the amazement at how fast the technology is moving, Manus has reignited a debate in the industry about the path of AI evolution: will a single AGI dominate the future, or will multi-agent systems (MAS) working in concert prevail?

The debate stems from Manus's design philosophy, which points to two possibilities:

One is the AGI path: continuously raising the intelligence of a single system until it approaches human-level, all-round decision-making.

The other is the MAS path: acting as a super-coordinator that directs thousands of specialised (vertical) agents working together.

On the surface this is a debate about paths; underneath, it is about a fundamental tension in AI development: how to balance efficiency and security. The closer a monolithic intelligence gets to AGI, the greater the risk of black-box decision-making. Multi-agent collaboration, by contrast, spreads risk across agents but may miss critical decision windows because of communication latency.

The evolution of Manus quietly magnifies the risks inherent in AI development. First, data privacy black holes: in medical scenarios, Manus needs real-time access to patients' genomic data, and in financial negotiations it may touch a company's undisclosed financial information. Second, the algorithmic bias trap: in hiring negotiations, Manus could recommend below-average salaries to candidates of a particular ethnicity, and when reviewing legal contracts it could misjudge nearly half of the clauses relating to emerging industries. Third, vulnerability to adversarial attacks: a hacker could embed a specific audio frequency to make Manus misjudge the counterparty's offer range during a negotiation.

This exposes a painful truth about AI systems: the smarter the system, the larger its attack surface.

Security, however, is a constant theme in Web3. Within the framework of Vitalik Buterin's "impossible triangle" (a blockchain network cannot achieve security, decentralisation, and scalability at the same time), a variety of cryptographic approaches have emerged:

-

Zero Trust Security Model: The core idea of the Zero Trust security model is "trust no one, always verify", meaning that devices should not be trusted by default, regardless of whether they are on an internal network or not. This model emphasises strict authentication and authorisation for each access request to ensure system security.

-

Decentralized Identity (DID): DID is a set of identifier standards that enable entities to be identified in a verifiable and persistent manner without the need for a centralised registry. This enables a new model of decentralised digital identity, often compared to self-sovereign identity, and is an important part of Web3.

-

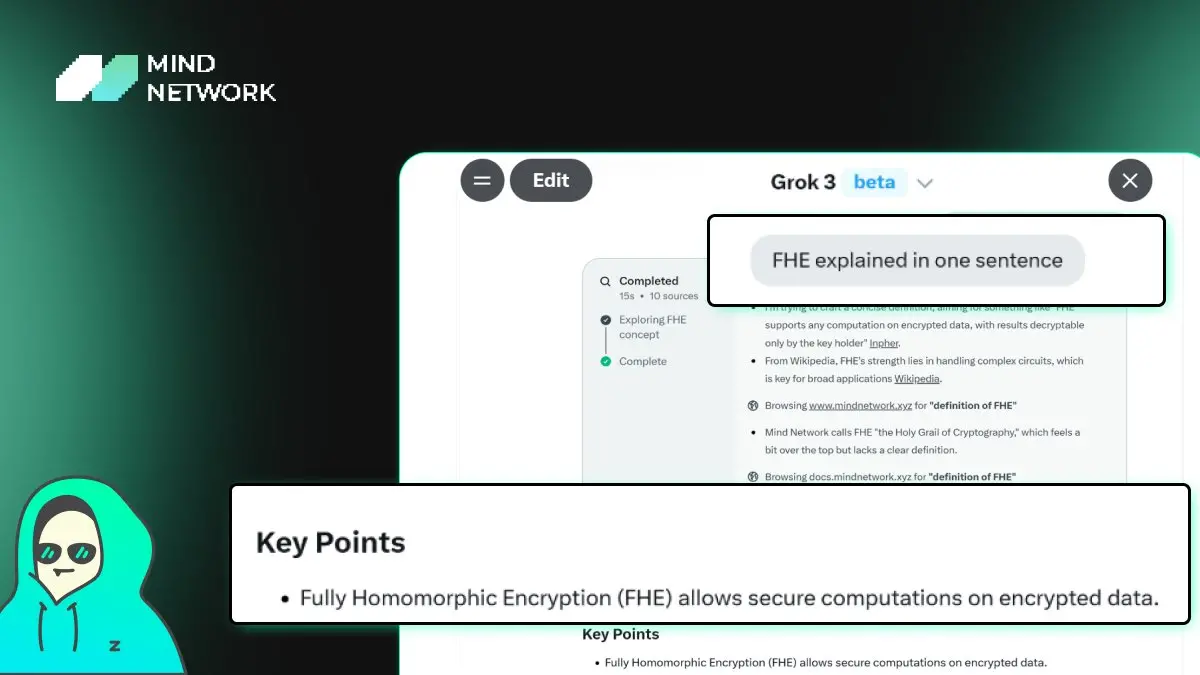

Fully Homomorphic Encryption (FHE): is an advanced encryption technology that allows arbitrary computation to be performed on encrypted data without decrypting it. This means that a third party can perform operations on the ciphertext, and the result obtained after decryption is the same as the result of the same operation on the plaintext. This feature is important for scenarios that require computation without exposing raw data, such as cloud computing and data outsourcing.
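To make the homomorphic property concrete, here is a minimal Python sketch of a toy integer-based scheme in the style of DGHV (van Dijk, Gentry, Halevi and Vaikuntanathan). It is only somewhat homomorphic, not fully homomorphic: noise grows with every operation, and practical FHE schemes use lattice-based constructions plus bootstrapping to keep computing indefinitely. The key sizes are toy values chosen for speed and are not secure. Adding two ciphertexts yields an encryption of the XOR of the hidden bits, and multiplying them yields an encryption of the AND, so a half adder can be evaluated without the secret key.

```python
import secrets

# Toy, INSECURE sizes chosen so the demo runs instantly.
P_BITS = 64      # bit length of the odd secret key p
Q_BITS = 128     # bit length of the random multiplier q
NOISE_BITS = 8   # bit length of the random noise r


def keygen() -> int:
    """Secret key: a random odd integer p with its top bit set."""
    return secrets.randbits(P_BITS) | (1 << (P_BITS - 1)) | 1


def encrypt(p: int, bit: int) -> int:
    """c = p*q + 2*r + bit: the bit hides under a multiple of p plus small even noise."""
    q = secrets.randbits(Q_BITS)
    r = secrets.randbits(NOISE_BITS)
    return p * q + 2 * r + bit


def decrypt(p: int, c: int) -> int:
    """(c mod p) mod 2 recovers the bit while the noise term stays below p."""
    return (c % p) % 2


def h_xor(c1: int, c2: int) -> int:
    """Adding ciphertexts adds the noise terms, so the low bit becomes the XOR."""
    return c1 + c2


def h_and(c1: int, c2: int) -> int:
    """Multiplying ciphertexts multiplies the noise terms, so the low bit becomes the AND."""
    return c1 * c2


if __name__ == "__main__":
    p = keygen()
    for a in (0, 1):
        for b in (0, 1):
            ca, cb = encrypt(p, a), encrypt(p, b)
            # A half adder evaluated on ciphertexts only; p is not used here.
            enc_sum, enc_carry = h_xor(ca, cb), h_and(ca, cb)
            assert decrypt(p, enc_sum) == a ^ b
            assert decrypt(p, enc_carry) == a & b
    print("half adder evaluated correctly on encrypted bits")
```

Because XOR and AND together can express any Boolean circuit, this is the sense in which FHE supports "arbitrary computation". The limit in this toy is the noise budget: each multiplication roughly squares the noise, so only a few levels of AND gates fit before decryption fails.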

Zero trust and DID have each produced a number of projects across several bull markets; some succeeded, while others sank in the crypto tide. Fully Homomorphic Encryption (FHE), the newest of the three to reach Web3, may be the key weapon for solving security problems in the AI era, because it allows computation to be performed directly on encrypted data.

How can FHE fix this?

First, at the data level: all user input (including biometrics and tone of voice) is processed while encrypted, so even Manus itself cannot decrypt the raw data. In a medical diagnosis, for instance, the patient's genomic data would be analysed as ciphertext throughout the process, preventing any leakage of biological information.
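The genomic example can be sketched with Paillier encryption, swapped in here because it is short enough to show in full; note that Paillier is only additively homomorphic, not fully homomorphic. In this minimal sketch the patient encrypts a handful of hypothetical 0/1 variant flags, and a server holding hypothetical plaintext risk weights computes an encrypted weighted score without ever seeing the flags. Only the key holder can decrypt the result. The fixed Mersenne primes are toy parameters, far too small for real use.

```python
import math
import secrets

# Toy primes (the Mersenne primes 2^31 - 1 and 2^61 - 1): far too small and
# too well known for real use, where random 1024+ bit primes are required.
P, Q = 2**31 - 1, 2**61 - 1


def keygen(p: int = P, q: int = Q):
    """Return the public modulus n and the private key (lambda, mu), with g = n + 1."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # inverse of lambda mod n, valid because g = n + 1
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    """Enc(m) = g^m * r^n mod n^2, using (1 + n)^m = 1 + m*n (mod n^2)."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (1 + m * n) * pow(r, n, n2) % n2


def decrypt(n: int, sk, c: int) -> int:
    """m = L(c^lambda mod n^2) * mu mod n, where L(x) = (x - 1) // n."""
    lam, mu = sk
    x = pow(c, lam, n * n)
    return (x - 1) // n * mu % n


def encrypted_weighted_sum(n: int, enc_values, weights) -> int:
    """Server side: Enc(sum(w_i * x_i)) from Enc(x_i) and plaintext weights w_i."""
    n2 = n * n
    acc = 1  # 1 is a valid (non-randomised) encryption of 0
    for c, w in zip(enc_values, weights):
        acc = acc * pow(c, w, n2) % n2  # c^w scales x by w; the product adds terms
    return acc


if __name__ == "__main__":
    n, sk = keygen()
    variants = [1, 0, 1, 1]  # hypothetical patient flags: carries variant i or not
    weights = [3, 5, 2, 7]   # hypothetical risk weights held by the server
    enc_variants = [encrypt(n, v) for v in variants]              # patient side
    enc_score = encrypted_weighted_sum(n, enc_variants, weights)  # server side
    print(decrypt(n, sk, enc_score))  # 3 + 2 + 7 = 12
```

The server only multiplies and exponentiates ciphertexts modulo n², which corresponds to adding and scaling the hidden plaintexts. A fully homomorphic scheme would additionally allow multiplying two encrypted values together, which non-linear models need.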

Second, at the algorithmic level: FHE enables "encrypted model training", in which the training data and intermediate values stay encrypted, so developers cannot peek into the AI's decision-making path.

Third, at the collaboration level: communication among multiple agents uses threshold encryption, so compromising a single node does not leak global data. Even in supply-chain attack-and-defence drills, attackers who infiltrate several agents still cannot obtain a complete view of the business, as long as they hold fewer key shares than the threshold.
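Threshold schemes are commonly built on secret sharing. This minimal sketch uses Shamir's secret sharing (a simpler building block swapped in here, not a full threshold-encryption protocol) to split a hypothetical session key among five agents so that any three can reconstruct it, while any two or fewer learn nothing about it. The field size and the 3-of-5 split are illustrative assumptions.

```python
import secrets

PRIME = 2**127 - 1  # Mersenne prime defining the field; secrets must be smaller


def split(secret: int, threshold: int, num_shares: int, prime: int = PRIME):
    """Shamir (t, n) sharing: evaluate a random degree t-1 polynomial with f(0) = secret."""
    if not 0 <= secret < prime:
        raise ValueError("secret must lie in [0, prime)")
    if not 1 <= threshold <= num_shares:
        raise ValueError("need 1 <= threshold <= num_shares")
    coeffs = [secret] + [secrets.randbelow(prime) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for a in reversed(coeffs):  # Horner's rule for f(x) mod prime
            y = (y * x + a) % prime
        shares.append((x, y))
    return shares


def combine(shares, prime: int = PRIME) -> int:
    """Lagrange interpolation at x = 0; needs at least `threshold` distinct shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % prime
                den = den * (xi - xj) % prime
        secret = (secret + yi * num * pow(den, -1, prime)) % prime
    return secret


if __name__ == "__main__":
    session_key = secrets.randbelow(PRIME)  # hypothetical key protecting agent traffic
    shares = split(session_key, threshold=3, num_shares=5)  # one share per agent
    assert combine(shares[:3]) == session_key
    assert combine([shares[0], shares[2], shares[4]]) == session_key
    print("any 3 of the 5 shares recover the key")
```

In a real threshold-encryption deployment the key is typically never reassembled in one place; each agent applies its own share to produce a partial decryption, and the partial results are combined. The sketch reassembles the key only to demonstrate the threshold property.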

Because of its technical barriers, Web3 security may not seem directly relevant to most users, but it is inextricably tied to their interests. In this dark forest, if you do not arm yourself as well as you can, you will never escape being one of the "leeks" (retail investors who get harvested again and again).

uPort was launched on the Ethereum mainnet in 2017 and was probably the first decentralised identity (DID) project to be released on the mainnet.

On the zero trust side, NKN launched its mainnet in 2019.

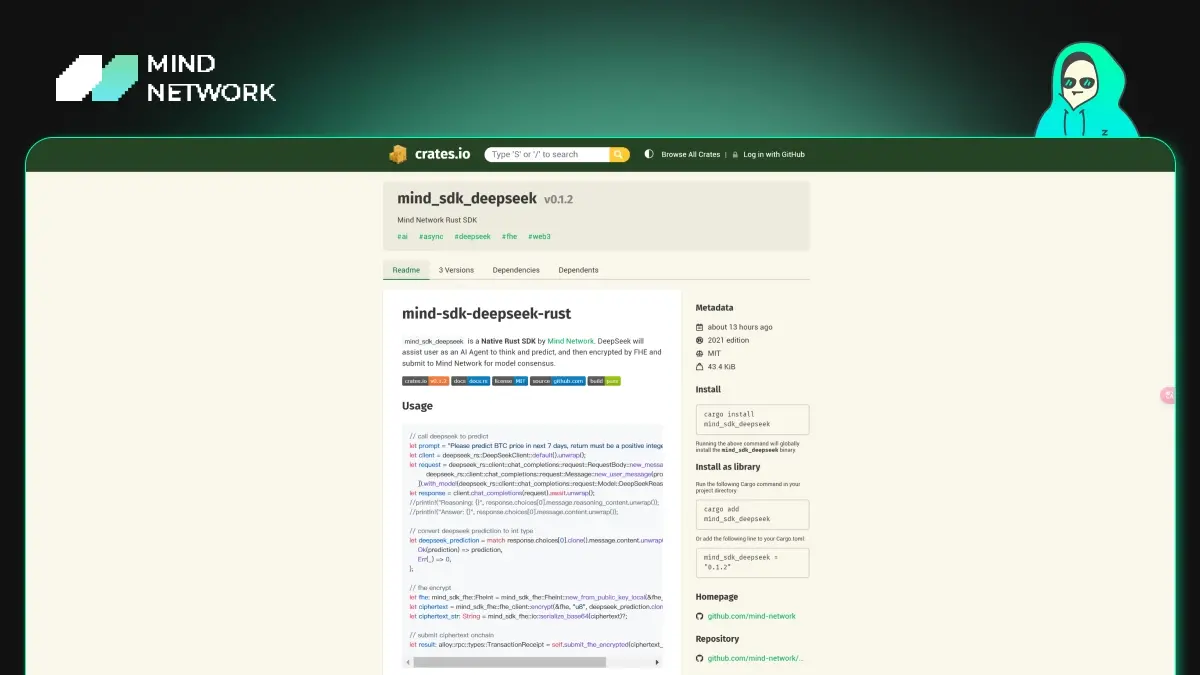

Mind Network was the first FHE project to launch on mainnet and was early to partner with ZAMA, Google, DeepSeek, and others.

uPort and NKN are projects I no longer hear anything about, which suggests that speculators pay little attention to security projects. Let's wait and see whether Mind Network can escape this curse and become a leader in the security field.

The future is here. The closer AI gets to human intelligence, the more it needs defence systems that are not human. The value of cybersecurity lies not only in solving today's problems but also in paving the way for the era of strong AI. On the steep road to AGI, cybersecurity is not optional; it is a necessity for survival.